By John Etchemendy, Provost, Stanford University

Few of these articles take a deep or serious look at the reasons for this increase. Many reporters (and their editors) seem satisfied with superficial explanations that point to a lavish new dorm at one college or a new recreation center (with obligatory climbing wall) at another.1 They don’t stop to think how rare and recent these collegiate Taj Mahals really are, or what a tiny fraction of a university’s budget—which is dominated by salaries, not debt payments on a new rec center—they represent. Though they may make for an attention-grabbing story, they can hardly explain a 30-year trend that has affected costs at even the most pedestrian of college campuses.

In fact, the rate of increase in the cost of higher education for the past 30 years has exactly matched the rate of increase in the cost of dental services, legal services, and, for most of those years, physician services.2 What dentists, lawyers, and physicians have in common is that they are highly educated service providers whose industries have not yet been transformed by efficiency gains brought about by new technology. It still takes one dentist about 20 minutes to fill one tooth, and although the filling is probably far better than it was 30 years ago, the dentist still needs to be paid for that 20 minutes. Just so, it still takes one professor an hour to teach a one-hour class to 40 students. Sure, classes could all be doubled or tripled in size to achieve greater efficiency, but colleges, students, and parents all recognize that this entails a sacrifice in quality. Ironically, the most commonly cited measure of educational quality, student-faculty ratio, can also be viewed as a measure of inefficiency.

Every service industry that has not benefited from sweeping efficiency increases and that relies on highly educated service providers is subject to the same economic forces.3 And the result has been a similar, long-term cost profile in each of these industries. Fancy dorms and climbing walls are not the cause of the problem. Would that they were, since that would make the solution easy. As it is, finding a more efficient way to deliver a truly high-quality college education is extremely difficult, but it is the only way to solve the cost crisis in higher education. Many promising experiments are now under way, including massive open online courses (so-called “MOOCs”), but it remains to be seen whether these or related technologies will yield high-quality substitutes for significant parts of the undergraduate curriculum.

Efficiency gains result from price competition, and U.S. colleges and universities, while highly competitive, have traditionally competed on the basis of quality, not price. This leads to fierce competition for high-quality faculty and creates pressure to decrease rather than increase the student-faculty ratio. It also leads to expansions of non-instructional staff to provide new and improved services to both students and faculty, and has pushed some campuses to upgrade their dorms and recreation centers—in a few cases, to extremes. But the key cause of the cost crisis is the basic economic fact described above. Unless we find a way to deliver a college education in a substantially more efficient fashion, its growth in cost will continue to outpace inflation.

There has also been a great deal of focus on the related issue of growing student debt. There is no question this is an increasing problem. But again, few articles have given it the careful treatment it deserves, opting instead to highlight stories of students borrowing over a hundred thousand dollars to finance their bachelor’s degrees. Such examples are extremely rare. Indeed, in 2007-08, the median debt nationwide of graduating seniors at non-profit colleges and universities was roughly $10,000, and 36 percent graduated with no debt at all. Thanks to financial aid, at Stanford, as at many of the “most expensive” universities in the country, the median debt of graduating seniors is zero (three-quarters graduate with no debt at all) and the average indebtedness of a graduating senior is less than $5,000.

The story is more sobering at for-profit colleges, where less than 10 percent of students have no debt when they graduate and 60 percent have debt of more than $30,000.4 Since these institutions disproportionately serve lower-income students, these levels of debt can leave their students in severe financial straits. Still, for-profit colleges educate a relatively small segment of the overall student population, though the segment is rapidly growing.

Many people were shocked when total student debt exceeded the nation’s cumulative credit card debt. This certainly deserves a story, but also warrants some thoughtful analysis. For example, not a single commentator has pointed out that this increase coincides with nationwide changes in consumer spending habits. College has long been an expense that families spread over many years. At one time, it was common to build college savings accounts in anticipation of eventual tuition bills. Now, college savings have become less common, only to be replaced by additional college debt.5 These are simply alternative strategies for spreading tuition costs over multiple years. And, like it or not, the substitution of debt for savings has become the preferred method for making most major purchases, whether of a refrigerator or a college education. Layaway plans and college savings accounts have given way to credit cards and college loans.

Most economists agree that, other things equal, a higher savings rate is healthier than increasing consumer debt. But setting that aside, how concerned should we be that educational debt now exceeds credit card debt? As consumer choices go, I could imagine much worse decisions than spending more on college education than on current consumption. At least college is an investment in the future.

None of this is to say that the cost of college and the magnitude of college debt are not real concerns. They are, and they pose a serious threat to the accessibility of college for an increasingly large portion of our populace. But no progress will be made on either issue without understanding what is really going on. As in medicine, treatments based on faulty diagnoses are often far worse than no treatment at all.

The value of a college education

The most deeply troubling charge leveled at U.S. colleges and universities concerns the value of the education they provide their students. It has long been assumed that a college education yields significant benefits both for the individual who receives the education and for the nation as a whole. It has become a platitude that a college degree is needed to successfully compete for a job in the so-called “knowledge economy,” and that national competitiveness increasingly depends on the proportion of our population who receive post-secondary educations.

But even this has been challenged in recent years. Some of the challenges are as superficial as articles attributing the college cost crisis to lavish dorms. For example, investor Peter Thiel generated great fanfare by launching a scholarship program that pays high school graduates not to go to college, but to launch businesses instead. This is based on his hunch that a college education does not improve one’s entrepreneurial skills, and so for these students would be a waste of time.

Of far more concern are reports from employers that college students are graduating without the skills needed to succeed in the workplace. Now, a college education is not job training, at least in the narrow sense, and was never intended to be. But it most certainly should equip students with general knowledge, skills, and habits of mind that provide the foundation for productive employment. If colleges are failing at this basic task, then students and parents are right to ask whether they get their money’s worth from high tuition, and taxpayers are right to question whether the government should continue subsidizing student loans.

Of course, complaining about perceived or imagined failings of the younger generation is nothing new, so isolated reports from employers are hardly evidence that the college degree stands for less now than it did twenty, thirty, or fifty years ago. There have always been plenty of students who made it through college without much growth in practical skills to show for it. This prompted Henry Ford, as far back as 1934, to comment, “A man’s college and university degrees mean nothing to me until I see what he is able to do with them.” Ford was a great supporter of education, but as his remark shows, he did not view the degree as an iron-clad guarantee of anything.

Still, while anecdotal reports may not be good evidence, it is extremely important to ask whether American colleges and universities are producing graduates with the kinds of skills needed in the modern workplace. This is why few books about higher education have received as much attention, either in the popular press or among policymakers, as Academically Adrift: Limited Learning on College Campuses, by Richard Arum and Josipa Roksa.

In this book, Arum and Roksa present the results of an extensive study of students at a large and representative sample of U.S. colleges and universities. Based on these results, they argue that a large proportion of students at today’s colleges and universities show little or no improvement on the key reasoning and communication skills expected of a college graduate and demanded in today’s employment marketplace. They conclude that all too many students, as well as the colleges they attend, are “academically adrift.”

The picture Arum and Roksa paint is a sobering one:

The Collegiate Learning Assessment test, or “CLA” as it is widely known, is a standardized test designed to measure general competencies in critical thinking, analytical reasoning, problem solving, and writing. These general skills are widely considered by both employers and educators to be among the most important workplace skills, and enhancing them is universally recognized as one of the primary goals of a college education. The most widely cited conclusion drawn by Arum and Roksa from their data is that 45 percent of the undergraduates studied showed no measurable gains in these crucial skills.

This would be a devastating indictment of the higher education system in the U.S.—if it were correct. It turns out, though, that the evidence presented by Arum and Roksa falls far short of justifying the sweeping claims made in their book, as I will eventually explain. But before looking at the details of the study, it is important to pause and review some powerful, general reasons to approach their conclusion with a healthy degree of skepticism. Chief among those reasons are certain basic economic facts that are well known but rarely appreciated for what they show. The first of these is the wide and growing discrepancy between the earnings of college graduates and the earnings of those who do not go to college.

The college premium

We all know that, on average, college graduates earn more than those who do not go to college. Indeed, according to a recent study by Daron Acemoglu and David Autor, the college premium—the difference between the earnings of the average college graduate and the average high school (only) graduate—stands at record levels. They calculate that the “earnings of the average college graduate in 2008 exceeded those of the average high school graduate by 97 percent.”7 In other words, college graduates on average earn nearly twice as much as those who do not go to college. There is little question that this is the largest college premium in history, and certainly the widest gap since comparative wage data became available in the early 20th century.
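As a quick illustration of the arithmetic, a premium quoted in percent can be converted into a ratio of average earnings. The sketch below uses only the 97 percent figure quoted above; the function name `earnings_ratio` is an illustrative assumption and does not come from the cited study.

```python
def earnings_ratio(premium_percent: float) -> float:
    """Convert a wage premium in percent into a ratio of average earnings."""
    return 1 + premium_percent / 100


# 97 percent is the 2008 premium quoted in the text.
ratio_2008 = earnings_ratio(97)
print(f"College graduates earn about {ratio_2008:.2f} times as much")
```

A premium of 97 percent thus corresponds to a ratio just under 2, which is the sense in which graduates earn "nearly twice as much."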

Other authors, using very different methodologies, come to similar conclusions. For example, while the figure cited above averages the earnings of all college graduates, including those with advanced degrees, Carnevale, Rose, and Cheah estimate that the projected median lifetime earnings of those with a baccalaureate degree alone are 74 percent higher than the earnings of those with just a high school degree.8

Perhaps more interesting, these authors find a college premium in almost every line of work, even those that do not require a college degree. For example, food service managers and retail salespersons—occupations open even to those with no high school diploma—benefit from a college education: in these professions, workers with a bachelor’s degree earn between 50 and 65 percent more than those with only a high school diploma. Other professions show more modest benefits from the college degree, such as stock clerks, waiters, and security guards. In these professions, the college premium ranges from 18 percent (stock clerks) to 45 percent (security guards). Rare is the occupation that exhibits no college premium at all: mail carriers, carpenters, and truck drivers are among the few lines of work where a college education does not, on average, increase an individual’s earnings.

In another study, Zaback, Carlson, and Crellin arrive at similar figures for the overall college premium, but also look at the premium for different college majors and in different states of the union.9 They find that the magnitude of the premium varies by major (a science and engineering major earns a 95 percent premium, while an arts and humanities major earns 55 percent), and by state (ranging from a 40 percent premium in South Dakota to 88 percent in California). But there is no combination of major and state that does not see a wage premium for a baccalaureate degree.10

If it is true that almost half of today’s students show “no measurable gains in general skills” as they proceed through college, then how can we account for the large and growing discrepancy between the incomes of those with and without a college degree? Why are employers paying so much more for employees who have graduated from college, even in lines of work where the degree is not a requirement for entry and even for majors that provide no directly relevant job training?

Of course, raw economic data do not prove causality, only correlation. But assuming we can rule out some kind of mass delusion on the part of the employers of America, there seem to be only two possibilities: The first is that employers are rewarding something other than skills that are gained or improved during college, perhaps general intelligence, persistence, or some other characteristic not substantially affected by the college experience. The only alternative is that employers pay the substantial wage premium at least in part for traits and skills that are acquired or honed during college.

Is college a passive filter?

Let’s consider the first possibility. Some people have claimed that the main benefit of a college degree is that it signals a set of abilities and traits that graduates bring to college, not characteristics they acquire while they are there. On this hypothesis, colleges play a primarily sorting or filtering role, and employers simply rely on this filter when they seek highly skilled workers. Employers are paying a premium not for how the college experience has molded or transformed prospective employees, but rather, so to speak, for the raw material, the preexisting traits that led to their original admission and eventual completion of the college degree.

This hypothesis does not stand up to scrutiny, for a number of reasons. Consider first the signal sent by college admission. College admission, at least to highly selective institutions, no doubt signals something about the individual admitted. Admission to a selective college is, after all, a filter. But employers could easily replicate this kind of filter themselves, by requiring that applicants supply the same material required by college admission offices: SAT scores, high school records, and so forth. For that matter, they could employ former college admission officers to assist in their hiring. Alternatively, they could begin recruiting freshmen who have already been admitted to a selective college, rather than waiting until they finish their degree. This is basically what professional sports leagues do, hiring players as soon as league rules allow.

The example of professional sports leagues is instructive. This is a case where employers are indeed primarily interested in traits the student athlete brings to college, rather than skills they acquire during their college experience. To be sure, college athletes further develop their athletic skills while playing at the college level. But for the most part, professional coaches are just as equipped as college coaches to give young athletes the necessary training and experience in their sport. The college degree itself, and the academic accomplishments it signifies, are irrelevant to their hiring decisions.

And what is the consequence of this situation? Put simply, the frequency of college degrees among professional athletes is directly proportional to the restrictiveness of the league rules governing rookie hiring. Major League Baseball has the least restrictive rules, allowing recruitment directly out of high school. As a result, a total of 39 MLB players who played in a major league game last year—roughly 4 percent—had college degrees.11 The National Basketball Association is slightly more restrictive; its “one and done” rule allows recruitment after a single year of college play. Roughly 20 percent of NBA players have college degrees. The National Football League has the most restrictive rules, and also the highest proportion of college graduates among its players. Approximately half of NFL players have completed a baccalaureate degree.12

Although there is no source of data to prove or disprove this, it is probably the case that among professional athletes, the college premium is actually negative: those with a college degree very likely have lower salaries on average than those without. This would be a predictable result of the fact that the most prized and talented athletes are recruited out of college long before they have a chance to finish their degrees. Less talented athletes, those not lured by early, highly lucrative recruitment offers, have more time to complete their degrees.

If employers were primarily using college as a signal of general intelligence, plus perhaps the ambition to apply and get into a selective college, then we would expect to see much more hiring behavior like professional sports leagues. But such early recruitment is virtually unheard of in any other profession.

It is also important to recognize that the prior discussion assumes that college admission is selective. But in fact, most college students do not attend schools whose admission is highly selective, and the college premium that needs to be explained is not limited to alumni of those institutions. It measures the average wage benefit across graduates of all colleges and universities. So the hypothesis that employers are using college admission as a filter is doubly flawed: it does not stand up to scrutiny for selective colleges, and even if it did, most college admission is not highly selective.

Of course, it might be that the signal employers are looking for is not admission to college but the fact that the individual persisted in pursuing a four-year degree. This is another way in which college acts as a filter: graduates were not only admitted, they also stuck with the college project for four long years. This requires a certain level of ability, commitment, and motivation that would certainly interest most employers.

Now we should be careful to remember the hypothesis we are considering. Obviously, a bachelor’s degree does signal, among other things, the ambition and persistence required to finish the degree. There is no debate about that. The question is whether employers pay a premium only for a graduate’s preexisting character traits, and not for skills and traits that are developed and improved due to the college experience. In other words, is college more like the TV show Survivor, with the college degree awarded to those who are motivated and talented enough to complete a sequence of otherwise pointless tasks?

Again, both salary data and employer behavior suggest otherwise. For example, if the college premium were primarily rewarding character traits like diligence and tenacity, traits that may well be indicated by a college degree, then we would expect individuals who enter college but fail to complete a degree to suffer a penalty compared to those who complete high school but choose to enter the employment market immediately. After all, the former individuals have proven that they did not have the required diligence and tenacity to complete the college project on which they embarked. But in fact the data point in the opposite direction. Individuals who begin college but fail to complete any degree still enjoy a 20 percent wage premium above high school graduates who go directly into the work force. This is exactly what we would expect if the premium actually rewards skills improved during college, not the persistence required to finish a degree.13

Moreover, there are many other ways to demonstrate persistence and diligence. The simplest and most obvious is to hold down a job for several years. Indeed, holding down a job is a significantly better signal of the ability to hold down a job in the future than having gotten through college. Other things equal, the more similar the evidence, the better the predictive power.

To the extent that a baccalaureate degree is simply a mark of diligence and persistence, we would expect employers to reward their more experienced employees equally. And yet the average high school graduate with five or even ten years of employment experience still does not earn close to what the average college graduate earns, even with little or no experience. This is hardly surprising. After all, an excellent high school record plus four years of diligent work experience does not qualify you for the kinds of jobs and pay levels open to those with a similar high school record plus a college degree. Yet equal access to those jobs and pay levels is exactly what we would expect if the pure “sorting and filtering” model were accurate.

Consider one final piece of evidence. A number of colleges and universities, particularly the most selective, have graduation rates well above 90 percent. Students admitted to these colleges not only have outstanding high school records, they are also virtually guaranteed to graduate. If the college degree were simply a signal of abilities and traits students bring to college, not a mark of what they get while there, then at least at these colleges, we should see employers recruiting students as soon as they are admitted. They have already demonstrated the intelligence and ambition required for admission to the most selective schools, and their eventual graduation is almost a sure bet. Why wait four years to hire them, when they could be spending those years productively employed? And yet, once again, outside of professional sports, no employers choose to do this.

Clearly, there is strong evidence against the hypothesis that college serves merely as a filter, that employers are interested primarily in the raw material, not how that material has been molded or transformed by the college experience. It would be extremely hard to explain employer behavior if they are not rewarding traits and skills that students obtain or improve during their time at college. Given that fact, what are we to make of the economic data surrounding the college premium?

As any economist will tell you, the college premium is a measure of the value employers place on the skill (and consequent productivity) differential between college graduates and those with lower levels of education. Thus the college premium can be affected by a number of different factors. Traditional economic theory focuses on the relative supply of and demand for the skills represented by a college degree. For example, it is well understood that the relative demand for highly skilled labor increases as technology transforms the workplace. Technology tends to decrease the need for large numbers of unskilled laborers, but also requires more highly skilled workers to implement, operate, and maintain. Recent history has seen a large increase in technological innovation, and this in turn has increased the relative demand for skilled over unskilled workers.

The other side of the economic story is the relative supply of the skills in question: are there enough educated workers in the workforce to meet the demand, or too few or too many? In the U.S., the supply of college graduates has continually increased since the 1950s, though the rate of increase has not been constant. The increase was quite rapid during the late ’60s through the ’70s, but slowed down during the ’80s and ’90s. And as it turns out, the college premium actually decreased from 1970 to 1980, no doubt because the rapidly growing supply of college graduates outpaced any increase in demand. Since 1980, however, the college premium has steadily grown, thanks to a combination of increasing wages of college graduates and decreasing wages of those with only high school degrees.14 From 1980 to the present, the college premium in the U.S. has almost doubled.

There is another factor, besides the aggregate supply and demand for skilled labor, that can affect the college premium. Remember that the college premium measures the relative wages of college and high school graduates. But of course, employer demand is for workforce skills, not diplomas. As we said earlier, the premium is a measure of the value employers place on the differential skills of these two groups of workers. But that differential could change. For example, suppose there came a point where there were no differences in the skills of a college graduate and a high school graduate. Very quickly, the college premium would trend toward zero. There would still be differential demand for workers with different skill levels, but if the college degree no longer indicated a higher level of skills, employers would not be willing to pay a premium for those who hold the degree.

This introduces a layer of complexity, but an extremely important one, to the college premium. After all, the relative skills of high school and college graduates—and hence the underlying value to employers—could change if there were significant changes in the educational effectiveness of either high schools or colleges. For example, if the average skills of students graduating from high school dropped while those of college graduates remained roughly the same, then we would expect the relative value of college graduates to increase, even though their absolute skill level remained the same. On the other hand, if the skills of college graduates dropped while those of high school graduates remained the same, we would see the college premium decline. The gap in skills is what matters, and if this grows or shrinks, so too will the college premium.

The central question raised by Arum and Roksa in Academically Adrift is whether U.S. colleges have in recent years become less effective in imparting important workplace skills to their graduates. To put this important question another way, has the skill differential between high school graduates (the “raw material” entering college) and college graduates (the output) decreased?

At first glance, it is hard to square such a decrease with the economic data, with the continued growth in the U.S. of the college premium. Other things equal, a decrease in the skill differential should result in a shrinking of the premium, not continued growth. But of course, other things are not equal: changes in either the supply of college graduates or the demand for their skills might disguise changes in the skill differential represented by a college degree.

Now as we mentioned earlier, the relative supply of college graduates in the U.S. has continually increased during the postwar period, including in recent decades. Other things equal, this would also lead to a decrease in the college premium, and so would accentuate, not counteract, a decline in the college skill differential. So the only potentially confounding factor is changing demand for skilled labor. In particular, if demand for skilled workers has increased enough, then desperate employers might pay more for college graduates even if there are more of them available and the incremental skills they each bring to the workplace have gone down.

This seems fairly unlikely, but is hard to rule out entirely without a direct gauge of changing U.S. demand for skilled labor, one that is independent of the college premium itself. But no such measure is available. We can, however, learn something by looking at international data. Since the workplace technology driving today’s demand for skilled workers is cheap and widely available in the developed world, it is reasonable to assume that there is approximate parity in the demand for a skilled workforce in other highly developed economies. So it is instructive to look at the college premiums paid in other developed countries.

Unfortunately, systems of secondary (high school) and tertiary (college) education vary a great deal across developed countries. In some countries, such as the United Kingdom, secondary education goes further while the first college degree is more specialized than in the U.S. In other words, much of what is covered in the first year or so of college in the U.S. is already covered in secondary schools in the U.K. Conversely, college education in the U.K. roughly matches the last two or three years of a U.S. college degree. The overall target is approximately the same, but the secondary/tertiary division of labor is somewhat different.

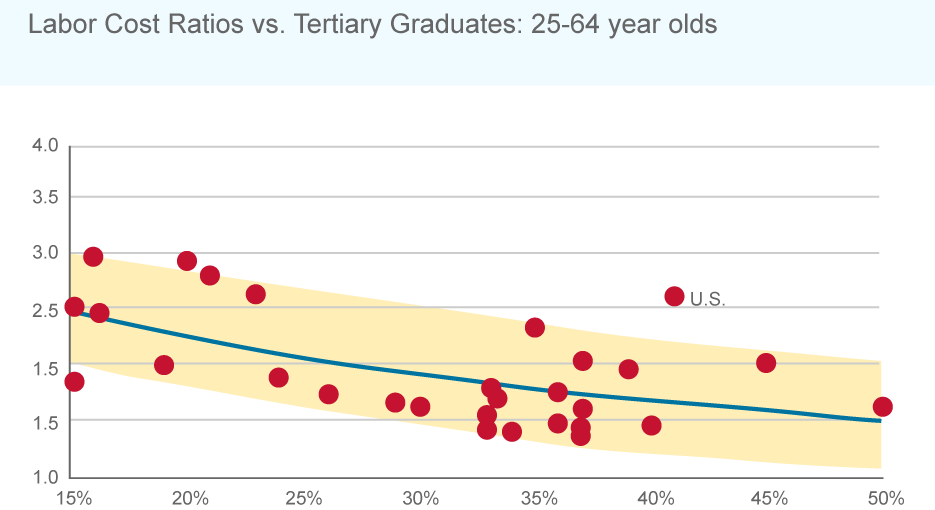

Because of these differences, there are fewer confounding variables if we look at the premium paid for college graduates compared to unskilled or minimally skilled workers, that is, individuals who did not complete their secondary (high school) education. It seems reasonable to assume that the skill levels of workers who have not completed high school, at least in economically developed countries, are fairly similar. Accordingly, let’s compare the labor cost of a college graduate to the labor cost of an unskilled or minimally skilled worker in the 34 countries that make up the Organization for Economic Cooperation and Development (OECD).15 The labor cost is the annual cost to the employer of hiring such a worker, including wages, benefits, and other mandatory costs. It is the best measure of what employers are willing to pay for different levels of skill.

The ratio of the average labor costs across all 34 countries is 1.8. In other words, on average employers are willing to pay 1.8 times as much for a college graduate as they are for an unskilled worker. In the U.S., unskilled workers cost employers an average of $35,700, very close to the overall OECD average of $37,900. But a college graduate costs an employer $92,900, 2.6 times the cost of an unskilled worker. There are only four OECD countries whose cost ratios equal or exceed 2.6: the Czech Republic (2.9), Hungary (2.9), Poland (2.8), and Slovenia (2.6). In each of these countries the relative supply of college graduates is among the lowest in the OECD, which accounts for their high ratios. By contrast, in countries where the supply of college graduates is similar to the U.S., the labor cost ratio is substantially lower than ours.16
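The U.S. ratio quoted above can be checked directly from the two dollar figures. The following is a minimal sketch in Python; the function and variable names are illustrative, and only the two cost figures come from the text.

```python
# U.S. annual labor costs quoted in the text (dollars per worker).
US_UNSKILLED_COST = 35_700
US_GRADUATE_COST = 92_900


def labor_cost_ratio(graduate_cost: float, unskilled_cost: float) -> float:
    """Return how many times more a college graduate costs an employer
    than an unskilled or minimally skilled worker."""
    return graduate_cost / unskilled_cost


us_ratio = labor_cost_ratio(US_GRADUATE_COST, US_UNSKILLED_COST)
print(f"U.S. labor cost ratio: {us_ratio:.1f}")
```

Rounded to one decimal place, the result matches the 2.6 figure given for the United States.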

If you graph the labor cost ratios of all the OECD countries against the percentage of college (tertiary) graduates, the resulting graph shows the economically predicted decrease in the cost ratio as the supply of graduates increases, with one notable exception: the United States is a clear outlier, with a substantially higher college premium than would be expected given its plentiful supply of graduates.17

This anomaly prompts the following observation by the OECD authors:

Since there is no apparent reason the demand for workplace skills in the U.S. should differ strikingly from all other OECD countries, this would suggest that the skill (and consequent productivity) differential is actually larger in the U.S. than in other countries.

It is very hard to square these data with Arum and Roksa’s conclusion that colleges in the U.S. are failing to impart important workplace skills to their graduates. Of course, these authors focus on more recent graduates, while the economic data we’ve examined so far deal with broad averages in the overall workforce. Would we see a difference if we narrowed our view to recent graduates?

The answer is no. If we look at the labor cost ratios among 25- to 34-year-olds, the pattern remains roughly the same. In the U.S., the cost ratio between 25- to 34-year-old college graduates and 25- to 34-year-old unskilled workers is 2.3, while the OECD average is 1.5. Only two other OECD countries have ratios in this age cohort that are comparable to the U.S.: Hungary (2.5) and Luxembourg (2.3). Again, Hungary’s high ratio is influenced by a low percentage of 25- to 34-year-old college graduates, but Luxembourg’s educational attainment rates are similar to ours. Thus among this cohort of workers, Luxembourg and the United States are the two standouts among OECD countries. There is no evidence here that the skill differential among recent graduates of American colleges and universities has declined in the least.

Comparative data on the wage premium strongly suggest that college graduates in the U.S. are more productive relative to unskilled or minimally skilled workers than college graduates in other developed countries. Furthermore, the data suggest that this holds as much for recent graduates as for those who graduated years ago. This is consistent with two widely held views: first, that the U.S. system of primary education does not compare well with primary education in many other countries, and second, that U.S. higher education remains the best in the world.

Education and regional prosperity

So far, we’ve considered the economic effect of a college degree on the individual receiving the degree. But equally relevant is the effect of a college-educated workforce on a community or region’s economic productivity. A recent study by the Milken Institute tracked changes in educational attainment levels and economic output for 261 U.S. metropolitan areas for the years 1990, 2000, and 2010.19 Not surprisingly, the authors found that increases in a region’s average level of education are strongly correlated with the area’s gross domestic product per capita and real wages per worker. Specifically, adding one year to the average education level of the workers in a region is associated with a 10.5 percent increase in per capita GDP and an 8.4 percent increase in average wages.20

This is an impressive correlation, but the Milken authors found that the effect is even more striking when the added education is at the college level. In particular, they looked at the impact of an additional year of schooling among workers who already had at least a high school diploma. In other words, what happens if the average education of high school graduates in a region increases from, say, 13.5 years (one and a half years of college) to 14.5 years (two and a half years of college)?

It turns out that for each additional year of college, the per capita GDP of a region increases a remarkable 17.4 percent. Similarly, the average worker’s wages in the region are boosted 17.8 percent. By contrast, “an additional year of education for workers with just nine or 10 years of schooling has little effect on real GDP per capita or real wages per worker.”21

The Milken study found large variations in how much the per capita GDP of metropolitan areas in the U.S. changed from 1990 to 2010. And while they identify several factors that contribute to the variation, such as changes in the mix of industries in a region, they conclude that over 70 percent of the variation is explained by the change in education level of the region’s workforce.22 Increasing the level of schooling, particularly at the college level, was by far the dominant driver of a region’s productivity gains.

The focus of the Milken study on regional productivity gains sheds important light on the effectiveness of U.S. colleges and universities. While one might conceivably imagine that the salary premium for a baccalaureate degree is the result of something other than differential skills acquired in college—say, the network of influential contacts a graduate obtains—it is hard to see how anything other than differential skills could yield the kind of productivity gains analyzed by the Milken authors. If colleges are not increasing the workplace skills, that is, productivity, of the individuals they educate, then how can educating more members of a region’s workforce increase the productivity of the region? The productivity must come from a more productive workforce.

The Milken study shows that there is a real economic benefit to college education, not just a personal benefit to the individual receiving the degree. It also shows that American employers are acting quite rationally when they pay a premium for college-educated employees, since it allows them to capture the very real productivity gains that result from additional education. In a sense, it completes the picture whose contours were suggested by the wage data previously reviewed. College education produces a more productive worker, and that is why employers pay more for college graduates.

Arum and Roksa’s argument

All of the economic data point to the same conclusion: American colleges and universities are equipping their graduates—and equipping them remarkably well—with skills that enhance their productivity in the workplace. This is the backdrop against which we should assess the central argument of Academically Adrift. All too many readers, in both the popular media and academic circles, have uncritically accepted Arum and Roksa’s conclusions without the slightest hesitation. Like the verdict that college costs are driven by lavish dorms, the story seems almost too congenial to criticize.

Let’s look more carefully at Arum and Roksa’s methodology to see if we can understand how their conclusion can run so counter to the brute economic evidence. Arum and Roksa base their argument on results obtained by administering a standardized test, the Collegiate Learning Assessment (CLA), to a large population of students. The test was administered twice, once during the first semester of their freshman year, and then again during the last semester of their sophomore year. The population was drawn from a wide variety of colleges and universities, and by most measures closely resembles the overall student population of the U.S. Since the study follows the same set of students through the first three semesters of their college experience, it seems reasonable to assume that the difference in individual scores measures the impact of the intervening semesters on the students’ performance on this test.

The CLA is a standardized test intended to measure general skills in critical thinking, analytical reasoning, problem solving, and writing. It does not assess more specific subject-matter knowledge or other abilities that students may learn in their courses. Nonetheless, these general skills are widely considered among the most important workplace skills, and enhancing them is universally recognized as one of the primary goals of a college education. The CLA is a “constructed response” test, relying on essay-style responses rather than predetermined, multiple-choice answers. The test is scored by human graders applying rubrics designed to ensure consistency in scoring.

Given the economic evidence running counter to Arum and Roksa’s conclusion, there are two questions—or really, clusters of questions—that we need to consider. First, we need to ask what exactly the CLA measures. Arum and Roksa assume that it measures the key general skills most highly valued in today’s workplace. But this assumption might be mistaken for two sorts of reasons, which we will discuss in a moment. Second, we need to examine Arum and Roksa’s interpretation of their data, to see if the conclusions they draw from it are actually supported by the evidence. What does their data actually show, and is the situation as bleak as they make it out to be?

What does the CLA measure?

It is clear that the CLA measures something, if only the ability to perform well on this and similar types of tests. But does it measure the crucial skills and characteristics actually required in the workplace? This may not be the case for two reasons. First, the CLA may be an accurate measure of general reasoning, analytical, and communication skills, but the workplace may put a higher value on the specialized knowledge and skills that the CLA does not even attempt to measure, such as quantitative skills or subject-specific knowledge. This could certainly explain the divergence between Arum and Roksa’s observations and the high value U.S. employers place on a college education. But if U.S. colleges are successfully producing graduates with the skills most highly valued by employers, even if not those measured by the CLA, it is hard to view Arum and Roksa’s results as indicating a serious problem. They are simply measuring the wrong thing.

No doubt specialized skills explain some of the disconnect between Arum and Roksa’s results and the economic data. Highly specialized knowledge that can only or most easily be obtained in college certainly accounts for some fraction of the college premium. But this cannot be the whole story, or even the dominant factor. For one thing, the specialized knowledge and skills that lead to the most highly paid professions require graduate or professional degrees, and the premium for a baccalaureate degree alone is already 74 percent. There are a small number of undergraduate majors that provide professional or quasi-professional training, but the college premium is by no means limited to these majors. Indeed, specialized skills cannot begin to explain the striking breadth of the college premium, the fact that it shows up to a greater or lesser extent in virtually every line of work, and regardless of the student’s major. This breadth must be due to more general skills and characteristics that are broadly acquired by college graduates.

The second possibility is that the CLA is aimed at the right general skills, but it may be a poor measure of those skills, at least as they manifest themselves in the workplace. It is easy to see how this might be the case. The CLA is a timed, standardized test, administered in an artificial setting quite unlike that encountered in an actual workplace. It would not be surprising if the outcomes from this test do not correlate well with an individual’s ability to analyze problems in the workplace, find optimal solutions, and successfully communicate or carry out those solutions. After all, the two tasks, standardized test taking and the average workplace challenge, could hardly be more dissimilar. They are performed on very different time-scales (one or two hours versus days or weeks); they involve different levels of motivation (a test whose results have no personal consequences compared to the highly salient consequences of salary and career advancement); and they permit entirely different strategies (such as seeking advice from others, trial and error, and other approaches precluded to the test taker). Even the communication skills measured by the CLA (basically the ability to write a formal memo) are of uncertain relevance to a workplace dominated by email and oral communication.

It would be a mistake to underestimate the importance of these differences. Take, for example, motivation. A recent study by researchers from the Educational Testing Service, one of the largest providers of standardized tests, showed that differences in motivation have a huge impact on students’ test scores.23 Using a test designed to measure college-level reasoning, quantitative, and communication skills, they found that motivated students, particularly those who stand to personally benefit from their own high performance, significantly outperformed students in a control group who were not similarly motivated. The researchers found that the effect on performance was as large as .68 standard deviations at one institution, the equivalent of a 25-percentile performance difference for the average student, and averaged .41 SD at the three institutions studied.24 By comparison, the average difference seen by Arum and Roksa between freshman and sophomore scores on the CLA was .18 SD, the equivalent of a 7-percentile difference.
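The percentile equivalents quoted above follow from the standard deviation figures if one assumes that test scores are normally distributed and that the "average student" starts at the 50th percentile. The sketch below, in Python, makes that conversion explicit; the normality assumption and the function names are mine, not the researchers'.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def percentile_shift(effect_sd: float) -> float:
    """Percentile points gained by a student who starts at the 50th
    percentile and moves up by effect_sd standard deviations.
    Assumes (hypothetically) that scores are normally distributed."""
    return 100.0 * (normal_cdf(effect_sd) - 0.5)


# Effect sizes quoted in the text, in standard deviations.
effects = [
    ("ETS motivation effect, largest", 0.68),
    ("ETS motivation effect, average", 0.41),
    ("CLA gain, freshman to sophomore", 0.18),
]

for label, effect in effects:
    shift = percentile_shift(effect)
    print(f"{label}: {effect:.2f} SD is about {shift:.0f} percentile points")
```

Under these assumptions the 0.68 SD and 0.18 SD figures come out near 25 and 7 percentile points, consistent with the numbers in the text. On the same scale, the average motivational effect (0.41 SD) is more than twice the measured learning gain.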

The test used by the ETS researchers contained both a constructed response (essay) section and multiple-choice sections. Interestingly, they found that the impact of low motivation was significantly larger on the essay section of their test—the part most similar to the CLA—than on the multiple-choice sections. This stands to reason since, as they note, “it takes more effort and motivation for students to construct an essay than to select from provided choices.”25

Whether or not this is the complete explanation, there is an important lesson to be learned from this study. Arum and Roksa’s application of the CLA, as with most uses of testing for program rather than individual assessment, is an example of low-stakes testing, that is, testing whose results make little or no material difference to the test takers themselves. Low-stakes testing is extremely vulnerable to the vagaries of student motivation. Unless measures are taken to ensure that the test takers are actually motivated to perform to the best of their abilities, the results are of questionable value.

The fundamental lesson might be put this way: low-stakes testing is not even an accurate measure of the individual’s capacity to perform well on that very test. We began this discussion by saying that the CLA measures, at the very least, the ability to perform well on tests of this sort, but in fact even that may be an unwarranted assumption.

Note that this problem is independent of the question of whether the test, even when taken by perfectly motivated subjects in ideal circumstances, accurately measures the real-world skills that we are interested in and that we actually teach. We have already described several reasons the CLA may do a poor job of measuring analytical and problem-solving skills as they manifest themselves in the actual workplace. But there are others. For example, there are many general traits and habits of mind that are resistant to accurate measurement by standardized test, and yet are highly relevant to workplace problem solving. Creativity, judgment, and the ability to work with others are obvious examples.

The more we appreciate the sheer complexity of skills, character traits, and habits of mind that affect an individual’s performance in the workplace, the more skeptical we should be that a standardized test can be devised that accurately measures that ability. And this is why a quality undergraduate education provides a wide range of subjects, taught in classes employing a diverse mix of instructional formats and assessment methods, along with ample opportunities for experiential learning and other co-curricular activities. It is no accident that the complexity of the workplace is reflected in the complexity of the college experience.

It takes an extreme leap of faith to think that a test like the CLA can accurately measure the general skills demanded in today’s workplace, or even a significant subset of those skills. Of course, the best measure of whether college graduates in the U.S. are acquiring the skills needed in the workplace is, and will always be, their actual performance in the workplace. And the best indicator of that remains the differential value employers place on college graduates over high school graduates, as reflected in the college premium.

If the CLA provided an important, independent measure of workplace skills, we would expect to find a significant wage differential between those who perform highly on the test and those who do not, once they enter the workforce. Unfortunately, there is not a lot of data on this key question, though there is some. Arum and Roksa performed a follow-up survey of the college graduates from their Academically Adrift study.26 Among other things, they found that those who had, as seniors, performed in the bottom quintile on the CLA were three times more likely to be unemployed than those from the top quintile (9.6 percent vs. 3.1 percent). But among those who were employed full time, they did not find the expected wage differential. The average salary of the top quintile ($35,097) was barely higher than the average salary of the bottom quintile ($35,000), and the average salary of the middle three quintiles ($34,741) was actually below that of the bottom group.

Since the follow-up survey was conducted just two years after most of the students graduated, it is possible that a wage differential will emerge as they proceed through their careers. But as it stands, this new data should give us pause. Arum and Roksa claim that U.S. colleges and universities are not equipping their graduates with the skills required in the modern workplace. But they base their argument on tenuous data from a test whose scores, according to their own follow-up survey, do not seem to predict earnings in the marketplace. Against the backdrop of overwhelming economic evidence to the contrary, it is hard to give this argument a great deal of credence.

What do the data really show?

There are many reasons to question whether the CLA is a good measure of the general skills expected of a college graduate. Still, the CLA has many supporters who consider it a state-of-the-art test of an extremely important set of reasoning and communication skills. As a logic professor who has taught these skills for over thirty years, I certainly concur about their importance, even though they may represent only a narrow sliver of the skills and characteristics that contribute to success in the workplace. It is essential to acknowledge two things, however. First, the CLA is by no means an accurate measure of even these limited skills. The dramatic effect of motivation on student performance alone shows that the results are anything but unerring. Second, it is abundantly clear that no standardized test can capture the full panoply of important characteristics that college aims to impart or improve. It is naïve to think that such a narrow and constrained measurement technique can adequately gauge the range of knowledge, skills, talents, dispositions, character traits, and habits of mind that contribute to workplace performance and that the college experience, at its best, molds and transforms.

Nonetheless, we can acknowledge both of these points but still wonder whether there are important lessons to be learned from Arum and Roksa’s data. Does it give us reason for concern about how well students are learning the specific skills the CLA targets? I think the answer, even to this more limited question, is no.

The first thing to realize is that the most widely reported claim made by Arum and Roksa—that 45 percent of the students made “no measurable gains in general skills”—involves a common statistical fallacy. Alexander Astin first pointed this out in an article in The Chronicle of Higher Education.27 Let me describe the problem in non-technical terms. Suppose we are interested in determining whether an individual student’s reasoning skills have improved between the two sittings of the CLA exam. We know that CLA scores in themselves are an imprecise measure of the underlying skills because of unavoidable sources of measurement error, such as the student’s mental or physical state on the day of the exam, imprecision in scoring the test, and so forth. So how do we know when a change in CLA score indicates a real change in ability?

If it is important to avoid wrongly declaring improvement when none has actually occurred—what is known as a false positive or Type I error—we need to require that the student’s later score exceed the earlier score by some margin of error. Using a larger margin of error gives us more confidence that the change in score is not simply a fluke but indicates a genuine improvement in skill. But the larger the margin of error we choose, the more false negatives or Type II errors we will incur, that is, students whose skills have actually improved even though their scores did not meet our more stringent requirement. That is the unavoidable tradeoff: aiming for fewer false positives inevitably produces more false negatives.

So where does the widely quoted 45 percent figure come from? Arum and Roksa have settled on a particular margin of error for measuring improvement (corresponding to a “95 percent confidence level”), and found that 55 percent of the students tested demonstrate improved levels of skill. That is, each of these students’ test scores increased more than the chosen margin of error, giving us confidence that the change in score was not caused by measurement error, but was instead due to a genuine improvement in skill.

But what can we say about the other 45 percent? Can we say with equal confidence that their skills did not improve between the two sittings? Absolutely not. In fact, the very technique that gives us confidence of improvement on the part of the 55 percent also ensures that the 45 percent includes more false negatives, students whose skills actually improved but who were excluded by our more stringent requirement. All we can say about these students is that their scores, for whatever reason, did not increase beyond the chosen margin of error. This might be due to no improvement in the skills in question, but might also be due to any number of measurement errors—for example, scoring imprecision or, to echo our previous discussion, decreased motivation on the part of the student.
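The tradeoff described in the last few paragraphs can be made concrete with a small calculation. The Python sketch below is purely illustrative and rests on assumptions that are mine rather than Arum and Roksa's: measurement error in the score change is taken to be normal, the threshold and the true gain are expressed in units of that error's standard deviation, and the true gain of 1.0 is a hypothetical value, not a figure from the study. The 1.96 threshold is the conventional cutoff associated with a 95 percent confidence level; the study's exact rule is not given here.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def flagged_probability(true_gain: float, threshold: float) -> float:
    """Probability that an observed score change exceeds the threshold.

    Both arguments are in units of the standard error of the score
    change; measurement error is assumed (hypothetically) to be normal.
    """
    return 1.0 - normal_cdf(threshold - true_gain)


# Hypothetical real improvement, chosen only for illustration.
HYPOTHETICAL_TRUE_GAIN = 1.0

for threshold in (0.0, 1.0, 1.96):
    # A student with no real gain who is nonetheless declared improved.
    false_positive = flagged_probability(0.0, threshold)
    # A student with a real gain who is not declared improved.
    false_negative = 1.0 - flagged_probability(HYPOTHETICAL_TRUE_GAIN, threshold)
    print(f"threshold {threshold:.2f}: "
          f"false positives {false_positive:.3f}, "
          f"false negatives {false_negative:.3f}")
```

As the threshold rises, the false positive rate falls and the false negative rate climbs. The two error rates move in opposite directions and do not cancel each other out.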

What do Arum and Roksa have to say about the possibility of false negatives, students whose skills improved though their scores fell short? Here is their casual dismissal of the problem:

The problem with this response is that it reveals a fundamental confusion. They are in effect saying: sure, there may be false negatives, but it is just as likely that there are false positives. But that’s simply wrong: the whole point of requiring a margin of error is to diminish the chance of false positives, though in doing so we necessarily incur more false negatives. The two sides do not somehow balance out. Thinking that they do is a blatant fallacy.

In fact, Arum and Roksa’s nonchalant dismissal of this concern would actually have been more appropriate if they had not employed any margin of error, but had simply assumed improved skills for all those whose raw score increased between the two sittings. Of course, the percent whose skills they reported as “improved” would then have been significantly higher than 55 percent, and the percent that did not “improve” would be correspondingly lower. Since Arum and Roksa do not provide their raw data, we do not know precisely how much the numbers would change.

In any event, the sensational and oft-repeated claim that 45 percent of the students in the study showed no learning gains is simply a mistake.

So looking at Arum and Roksa’s results, what can we legitimately say about student learning? There are three significant facts to keep in mind. First, it is important to remember that the two administrations of the test were separated by only three semesters of college, about a year and a half. Anyone who has taught either writing or critical thinking realizes that these are skills that are slowly improved through practice and repetition, not ones that are acquired easily or quickly. Second, we should bear in mind that Arum and Roksa’s data come from a low-stakes test setting and so are subject to large motivational effects. Given predictable declines in motivation between the two administrations of the test, the study is, as the ETS authors say, “likely an underestimation of students’ true college learning.”29 The motivational effects inherent in the study design already predispose the results toward false negatives (students whose improvement is masked by decreased motivation) and away from false positives (students whose test performance improved in spite of no change in underlying skill). Finally, the fact that we additionally require a statistical margin of error before declaring improvement biases the results even further away from false positives, while incurring the unavoidable risk of yet more false negatives.

Given these facts, what should our prior expectations be about the study’s results? Speaking for myself, I would not have been surprised if the study failed to detect any learning improvement between the two administrations of the test. The fact that 55 percent of the students involved in the study nonetheless showed improvement beyond the chosen margin of error is actually remarkable. Far from being an indictment of our students and our colleges, it is a surprising and encouraging result.

The same can be said for the change in average score. Arum and Roksa report that the average score on the exam improved by “only” .18 standard deviations. They go on to explain:

Arum and Roksa present this as a negative result. This is a rather puzzling reaction. Indeed, in another context, we could imagine the paragraph above appearing in an advertisement promoting an SAT test prep service. When we add to that the fact that the study design likely underestimates the students’ learning, it is hard to read into this data a legitimate cause for concern.

Once we strip away Arum and Roksa’s rhetoric of crisis and look at the actual data they present, it takes on an entirely different cast. Using a methodology that is biased toward understating student progress, they nonetheless see evidence of a reassuring degree of learning across a very broad base of students attending a wide variety of colleges and universities. They see this progress using a test that targets a set of abstract reasoning and communication skills widely known to be among the most difficult to teach, and they see the improvement after only three semesters of the students’ college experience.

This is not evidence of a system that is academically adrift, but evidence entirely consistent with what the economic data tell us: graduates produced by American colleges and universities display a significant skill differential that employers reward with the most substantial wage premium offered in the economically developed world.

Conclusion

The United States has the most complex and variegated system of higher education in the world. We have colleges where the dominant form of instruction is the large lecture and colleges whose largest class enrolls ten students. We have schools that deliver instruction primarily through hands-on internships and others that are primarily online. We have commuter colleges geared for the working adult and residential colleges tailored for the full-time student. We have schools that are highly selective, while others admit all comers. We have public institutions run by the states, ranging from local community colleges to world-renowned research universities. We have private non-profit institutions, including small liberal arts colleges, polytechnics and conservatories, religiously affiliated colleges and seminaries, and large, full-service universities. And we have a growing for-profit sector: from long-established technical institutes, to new, predominantly online universities.

I titled this essay, “Are our colleges and universities failing us?” At this point, a reader might expect my answer to be no, not really. But this answer would be as simplistic as those I’ve criticized. Indeed, the very complexity and heterogeneity of the U.S. system means that it defies broad generalization along almost any dimension.

Consider, for example, the issue of college cost. Suppose we ask what the primary driver of tuition increases has been during the past decade. Although tuition has increased faster than inflation in every sector, there is no single reason why. At community colleges, at one end of the spectrum, expenditures on education and related activities actually declined by almost a thousand dollars per student between 2000 and 2010. These colleges have not only contained expenses but reduced them. Yet the cost to students still increased faster than inflation, because state appropriations to the colleges declined even more than expenses.31 The reduced student subsidy provided by the states more than counteracted increased efficiency on the part of the colleges.

In contrast, at private colleges and universities the situation was effectively reversed. These schools spent substantially more on education and related expenses, yet their net tuition costs—that is, the average tuition a student pays after financial aid—remained almost exactly the same after adjusting for inflation.32 Indeed at many private institutions, the net cost of attendance actually declined, thanks to much more generous financial aid programs. Here, while the published tuition increased, the subsidies provided by college endowments more than made up for that increase.

It is even harder to broadly generalize about the educational effectiveness of such an extraordinary range of institutions. There are doubtless institutions in every sector (public, private, and for-profit) that fail to deliver acceptable educational outcomes, whose graduates are not well prepared for the jobs available in today’s marketplace. But on the extent of the problem, the economic data speak volumes: there is clearly no systemic or widespread problem with the educational effectiveness of U.S. colleges and universities. The only reliable measure of how prepared college graduates are for the workforce is how they actually perform on the job. And the best, broad-based measure of that is the college premium: how much employers are willing to pay for the incremental skills the college graduate brings to the job. There is no evidence that the skill gap between high school and college graduates in the U.S. has narrowed. On the contrary, the data suggest precisely the reverse.

The recent recession and virtually jobless recovery have taken a toll on wages in the U.S. This, along with the pervasive sense of crisis in higher education, has led to many popular articles focused on college graduates who find themselves unemployed or underemployed. These anecdote-driven stories often conclude by questioning whether college remains a good investment. They tend to ignore the (easily available) comparative data concerning wages and employment rates for young people without a four-year college degree. The truth is that while wages for recent college graduates have indeed declined about 5 percent, wages for high school and associate degree holders have declined 10 and 12 percent, respectively. Similarly, the proportion of recent college graduates who successfully transitioned into employment barely changed during the recession, while the rates for high school and associate degree holders dropped by 8 and 10 percent.33 Again, the actual data do not show that the value of the bachelor’s degree has recently declined, but rather that the value is more than holding its own, despite difficult economic times.

None of this, however, is cause for complacency. Our system of higher education is not without serious problems. But again, we need to treat the real problems, not imagined ones. At the top of the list are cost and accessibility: we need to find some way to bend the cost curve in higher education without making large sacrifices in educational quality. This is not an easy problem to solve, because the cost crisis is not driven by lavish student amenities, high administrative salaries, or other popular diagnoses, but by far more fundamental economic forces.

Of equal concern are college completion rates—the proportion of students who begin college and go on to graduate with a bachelor’s degree. Nationwide, fewer than 60 percent of students who enter a four-year college successfully complete a degree within six years. The other 40 percent spend considerable time and money, both their own and the taxpayers’, pursuing a college education, but then end up with little to show for it. This is the real wasteful spending in higher education, and unless we address it, there is little hope we can substantially increase the proportion of college-educated employees in the workforce.

Addressing these real problems should be the focus of our national education policy, regional accreditation boards, and university administrations. Concerns about whether those who successfully graduate are adequately prepared for today’s job market, or whether they have achieved appropriate “learning outcomes,” are largely a distraction from the actual problems of higher education in America. There is overwhelming evidence—evidence from the job market itself—that our colleges and universities continue to do well on that particular score.

2 For an excellent and careful analysis of rising college costs, see Why Does College Cost So Much? by Robert Archibald and David Feldman, Oxford University Press, 2011.

3 See Archibald and Feldman (2011) for a discussion of these forces. They argue that there are two main reasons costs for these services increase faster than the consumer price index (CPI). First, industries that show significant efficiency gains (such as manufacturing) tend to pull down CPI, and so those that do not show comparable gains (in this case, service industries) become more expensive relative to CPI. Second, as wages for highly educated workers increase relative to less educated workers (largely due to increases in technology), the cost of services requiring highly educated providers further outpaces overall inflation. These two factors explain a large part of the increase in college costs, though there are clearly other factors at work as well, including significant increases in instructional and support services provided on most campuses today.

4 National Postsecondary Student Aid Study (NPSAS), 2007-2008, cumulative borrowing by sector, http://nces.ed.gov/surveys/npsas/xls/B9_CumDebtLumpSectorBA08-09.xls.

5 According to a recent Moody’s investor report, in just the last three years, the proportion of families with any college savings dropped from 60 percent to 50 percent, and those who saved set aside an average of only $11,781, down from $21,615 three years ago. (Moody’s Investors Service, March 12, 2013.)

6 Academically Adrift: Limited Learning on College Campuses, Richard Arum and Josipa Roksa, Chicago (2011), p. 36.

7 “Skills, Tasks and Technologies: Implications for Employment and Earnings,” Daron Acemoglu and David Autor, NBER Working Paper No. 16082 (2010), p. 7.

8 The College Payoff: Education, Occupations, Lifetime Earnings, Anthony P. Carnevale, Stephen J. Rose, and Ban Cheah, Georgetown University Center on Education and the Workforce (2011), p. 4.

9 The Economic Benefit of Postsecondary Degrees: A State and National Level Analysis, Katie Zaback, Andy Carlson, and Matt Crellin, State Higher Education Executive Officers Association (2012).

10 Among standard baccalaureate majors, the lowest premium is 27 percent for social and behavioral science majors in New Hampshire and South Dakota.

11 “College Grads in Baseball a Rare Breed,” Jon Paul Morosi, Fox Sports, May 18, 2012, http://msn.foxsports.com/mlb/story/curtis-granderson-college-grads-in-baseball-a-rare-breed-051712.

12 “N.B.A. Players Make Their Way Back to College,” Jonathan Abrams, The New York Times, October 5, 2009, http://www.nytimes.com/2009/10/06/sports/basketball/06nba.html.

13 Carnevale et al., p. 3. Note that these are all, by definition, individuals who are in occupations that do not require a college degree. These occupations tend to be lower paying and also have smaller college premiums. For example, security guards with a baccalaureate degree earn about 45 percent more than those with only a high school degree, while those with some college but no degree earn about 20 percent more than those with only a high school degree. This explains why the premium for some college but no degree is only slightly more than a quarter of the premium for a baccalaureate degree.

14 See Acemoglu and Autor (2010), Figures 1-4.

15 The data in the following discussion are drawn from Education at a Glance, 2011, OECD, Tables A10.1, A1.3a, and A10.2. For the purpose of this discussion, “college graduate” includes all graduates of tertiary education programs, including those we would call technical colleges, and “unskilled worker” includes anyone who has not completed their country’s counterpart of our 12-year high school education. In some countries, they may have completed vocational training programs, and so be somewhat more skilled than their U.S. counterparts. The OECD countries are: Australia, Austria, Belgium, Canada, Chile, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Israel, Italy, Japan, (South) Korea, Luxembourg, Mexico, Netherlands, New Zealand, Norway, Poland, Portugal, Slovak Republic, Slovenia, Spain, Sweden, Switzerland, Turkey, United Kingdom and United States.

16 These countries and their labor cost ratios are: Israel (2.0), South Korea (2.0), United Kingdom (2.0), Australia (1.6), Canada (1.6), New Zealand (1.5), Finland (1.4) and Norway (1.4).

17 See Education at a Glance, 2011, Chart A10.3, which graphs data for 45-54 year-old workers. I have graphed the data for all workers from 25-64 years old, which is more inclusive and demonstrates the same point. If we graph the labor cost ratio of college graduates to high school graduates, rather than unskilled workers, the outcome is similar, although the ratios are obviously smaller. Here, the labor cost ratio in the U.S. is 1.7. The closest country with similar college attainment levels is Israel, at 1.6, and most fall well below 1.5.

18 Education at a Glance, 2011, p. 179, emphasis added.

19 A Matter of Degrees: The Effect of Educational Attainment on Regional Economic Prosperity, Ross C. DeVol, I-Ling Shen, Armen Bedroussian, and Nan Zhang, Milken Institute (2013).

20 The regional returns estimated by the Milken study are consistent with other recent studies. For example, Turner, et al., study the economic return to U.S. states as the average education level in the state increases. They estimate that “the return to a year of schooling for the average individual in a state ranges from 11% to 15%.” See “Education and Income in the States of the United States: 1840-2000,” Chad Turner, Robert Tamura, Sean Mulholland and Scott Baier, Journal of Economic Growth (2007).

21 A Matter of Degrees, p. 10.

22 A Matter of Degrees, p. 9.

23 “Measuring Learning Outcomes in Higher Education: Motivation Matters,” Ou Lydia Liu, Brent Bridgeman, and Rachel M. Adler, Educational Researcher (2012).

24 Liu, Bridgeman, and Adler, p. 356.

25 Liu, Bridgeman, and Adler, p. 360.

26 Documenting Uncertain Times: Postgraduate Transitions of the Academically Adrift Cohort, Richard Arum, Esther Cho, Jeannie Kim, and Josipa Roksa, New York: Social Science Research Council (2012).

27 “In ‘Academically Adrift,’ Data Don’t Back Up Sweeping Claim,” Alexander W. Astin, The Chronicle of Higher Education, February 14, 2011.

28 Arum and Roksa (2011), p. 219.

29 Liu, Bridgeman, and Adler (2012), p. 360.

30 Arum and Roksa (2011), p. 35.

31 Spending: Where Does the Money Go? A Delta Data Update 2000-2010, Delta Cost Project, American Institutes for Research, 2012, p. 10.

32 Trends in College Pricing 2012, The College Board,

33 How Much Protection Does a College Degree Afford?: The Impact of the Recession on Recent College Graduates, Economic Mobility Project, The Pew Charitable Trusts, 2013.